The Power of Synthetic Data as a Source of AI Training Data for Financial Services

Explore how synthetic data can help financial services firms use AI to meet critical business objectives.

Artificial Intelligence (AI) is quickly changing the financial services industry. Financial institutions are using AI to improve efficiency, enhance the customer experience, and stay competitive.

AI Use Case Examples in Financial Services

- Assess creditworthiness

- Detect and prevent fraud

- Customize financial products

- Simulate real-world scenarios

- Personalize customer interactions

- Optimize trading strategies

Capital One Financial, for example, has publicized how it is applying AI and machine learning to improve its fraud detection systems. According to the company, this has reduced false positives, improved detection rates, lowered costs, and increased customer satisfaction.

However, data preparation can consume up to 80% of an AI/ML project's timeline.

Data is a Critical Success Factor to Ensure Accuracy in AI

The accuracy and effectiveness of any AI-driven application depend directly on the accuracy and completeness of the data used to train, validate, and test its machine learning algorithms. So much so that training data has been called the programming language for AI.

The challenge for AI developers is to access, collect, evaluate, and prepare training data that meets this demanding quality standard. They must ensure that the datasets provisioned for training their models mitigate the following risk factors:

- Data Privacy & Security: Deploying AI in the highly regulated financial sector requires strict alignment with regulatory frameworks such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). It also requires robust governance structures and security measures to protect customer data and maintain trust.

- Algorithmic Bias: When AI systems are trained on existing financial data, especially small data samples, they risk perpetuating existing biases and discriminatory patterns, leading to unfair outcomes for certain customer segments. Financial institutions must proactively identify and mitigate algorithmic bias to ensure equitable treatment and avoid reputational and legal risks.

- Data Accuracy & Completeness: Incorrect or missing values can lead to incorrectly trained AI algorithms. That increases the probability of false positives, flawed predictions, and incorrect risk assessments. Complex training data must also preserve accurate relationships between related records, so-called “referential integrity,” for the training data to be accurate and useful.

- Sufficient Volume: AI generally benefits from large volumes of training data, and not only examples of legitimate activity. The signal for what “fraud” looks like must also be amplified. That gives the AI algorithms enough examples to learn from and helps minimize false positives.

- Evidence for Audits: Financial institutions must strive to develop more interpretable AI models and to provide clear explanations for AI-driven decisions to stakeholders, regulators, and customers. This requires traceability into how the training data was designed.
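To make “referential integrity” concrete, here is a minimal sketch. It assumes two hypothetical tables, customers and transactions, in which every transaction's `customer_id` must point to an existing customer. The names `Customer`, `Transaction`, and `find_orphans` are illustrative only. The check reports orphaned foreign keys, the kind of broken relationship that silently degrades training data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    customer_id: int
    segment: str


@dataclass(frozen=True)
class Transaction:
    txn_id: int
    customer_id: int  # foreign key: must match some Customer.customer_id
    amount: float


def find_orphans(customers: list[Customer], transactions: list[Transaction]) -> list[int]:
    """Return the txn_ids whose customer_id has no matching customer record."""
    known_ids = {c.customer_id for c in customers}
    return [t.txn_id for t in transactions if t.customer_id not in known_ids]


# Hypothetical sample data for illustration only.
customers = [Customer(1, "retail"), Customer(2, "small_business")]
transactions = [
    Transaction(100, 1, 25.00),
    Transaction(101, 2, 990.50),
    Transaction(102, 3, 42.10),  # customer 3 does not exist: integrity violation
]

print(find_orphans(customers, transactions))  # [102]
```

Real datasets have many such relationships (accounts, cards, merchants, ledgers). Each one has to hold at the same time for the data to reflect realistic scenarios.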

AI Training Data Solutions

Here are four alternative solutions for machine learning training data:

- Copy and “mask” production data

- Use Generative AI (GenAI) tools to generate data

- Use synthetic data generation tools to replicate production data

- Use GenRocket to design and generate any volume & variety of synthetic data

Option 1: Copy and Mask Production Data

Copying and masking production data is often the first consideration for training data. However, it has at least three drawbacks.

* Achieves functionality

** Doesn’t achieve functionality

- Data Security* – Masked data is generally secure. However, depending on the security posture, masking algorithms can potentially be reverse-engineered and still compromise sensitive information.

- Data Bias** – Production data often contains inherent biases that can skew AI model training.

- Data Accuracy & Completeness** – Gaps in production data can lead to incomplete training datasets.

- Sufficient Volume** – Limited production data may not provide enough volume for comprehensive AI training.

- Evidence for Audits* – Masked production data can be used as evidence during audits.

Masked production data may not adequately address data privacy concerns. Masking relies on an algorithm that can be reverse-engineered. Even after sensitive information is masked, there is still a risk of data leakage or re-identification. This can lead to compliance issues and damage customer trust.
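One common way masking fails is a dictionary attack. The sketch below assumes a naive scheme that replaces a value with its unsalted SHA-256 hash; it is a hypothetical example, not a description of any particular masking product. When the original value comes from a small domain, such as the last four digits of an account number, an attacker can hash every candidate and look for the match. The functions `mask` and `reidentify` are illustrative names.

```python
import hashlib
from typing import Optional


def mask(value: str) -> str:
    """Naive deterministic masking: replace the value with its unsalted SHA-256 digest."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


def reidentify(masked: str) -> Optional[str]:
    """Recover a masked 4-digit value by hashing all 10,000 possible candidates."""
    for n in range(10_000):
        candidate = f"{n:04d}"
        if mask(candidate) == masked:
            return candidate
    return None


masked_last4 = mask("4821")
print(masked_last4[:16], "...")    # looks opaque
print(reidentify(masked_last4))   # 4821
```

Keyed hashing (for example, HMAC with a secret key) or random tokenization defeats this particular lookup. Records can still be re-identified by linking other attributes, so the residual risk depends on the masking scheme as a whole.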

Moreover, production data often lacks the variety needed for comprehensive AI training and testing. It may contain biases and fail to represent certain scenarios adequately. Additionally, production data frequently suffers from data quality issues such as missing, inconsistent, or inaccurate values.

Option 2: Use GenAI to Generate Synthetic Data

GenAI is also being considered as a source of machine learning training data. However, it has at least three drawbacks.

* Achieves functionality

** Doesn’t achieve functionality

- Data Security* – Synthetic data generated by GenAI tools maintains data privacy and security.

- Data Bias** – GenAI-generated data may inherit biases present in the source production data.

- Data Accuracy & Completeness** – GenAI tools can produce inaccurate data points (“hallucinations”) and may not ensure referential integrity.

- Sufficient Volume* – GenAI tools can generate large volumes of synthetic data for AI training.

- Evidence for Audits** – GenAI-generated data cannot be reliably reproduced for audit purposes.

Some developers have turned to GenAI tools to generate synthetic training data as a way to mitigate the risks associated with using production data. While these tools can create new datasets by imitating real-world patterns, they may not always be suitable for training algorithms in the financial services industry.

One major concern is the accuracy and reliability of the generated data. GenAI models are susceptible to hallucinations, producing plausible but incorrect data points. In the financial services context, such inaccuracies can lead to serious consequences, such as flawed risk assessments or undetected fraudulent transactions.

Furthermore, GenAI models can generate biased or inconsistent data, especially if their own training data is biased or fails to fully represent critical scenarios. AI models trained on this output can then learn and amplify these biases, leading to inaccurate or unfair predictions.

Another challenge is maintaining referential integrity between key data elements. This is essential for data accuracy in real-world scenarios where data comes from multiple sources. GenAI tools do not guarantee referential integrity in the data they generate.

Option 3: Use Synthetic Data Generation Tools to Replicate Production Data

A number of synthetic data generation tool vendors have entered the market. These tools typically work by profiling a production dataset and generating a statistically accurate replica of the data. However, they have at least three drawbacks.

* Achieves functionality

** Doesn’t achieve functionality

- Data Security* – Synthetic data generation tools create secure, privacy-compliant datasets.

- Data Bias** – Replicating production data perpetuates existing biases in the synthetic dataset.

- Data Accuracy & Completeness** – Synthetic data replication may not address gaps or inconsistencies in the source data.

- Sufficient Volume** – Synthetic data replication may not provide sufficient volume for comprehensive AI training.

- Evidence for Audits* – Replicated synthetic data can serve as evidence during audits.

While replicating production data with synthetic data generation tools may seem like a viable approach, it has a significant drawback. These tools reproduce not only the data but also all the quality issues present in the original dataset, including inconsistencies, gaps, and biases.

In the financial services industry, these limitations can have serious implications. If the production data contains inconsistencies and biases, the synthetic data will inherit them. Training an ML model with invalid data can undermine its accuracy and produce costly false positives or negatives. So while this approach reduces security risks, it presents the same data quality challenges as using masked production data.

Synthetic data generated by these tools also often lacks completeness, because it depends on the quality of the source production data. These tools typically cannot design new data that does not exist in production, such as data that amplifies the signal of what fraud looks like. While they help address data privacy concerns, they aren't the best solution for machine learning training data.
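The sketch below shows why replication cannot fix a scarcity problem. It assumes a replica tool that profiles the frequency of a single label column; real tools model much richer joint distributions, and `profile` and `replicate` are hypothetical names. A 0.5% fraud rate in the hypothetical source comes out at roughly 0.5% in the replica, no matter how many rows are generated.

```python
import random
from collections import Counter


def profile(labels: list[str]) -> dict[str, float]:
    """Estimate the relative frequency of each label in the source data."""
    counts = Counter(labels)
    total = len(labels)
    return {label: n / total for label, n in counts.items()}


def replicate(freqs: dict[str, float], n: int, seed: int = 0) -> list[str]:
    """Sample a 'statistically accurate' replica from the profiled frequencies."""
    rng = random.Random(seed)
    labels = list(freqs)
    weights = [freqs[label] for label in labels]
    return rng.choices(labels, weights=weights, k=n)


# Hypothetical source data: 5 fraud cases in 1,000 transactions (0.5%).
source = ["fraud"] * 5 + ["legit"] * 995
replica = replicate(profile(source), n=100_000)

source_rate = profile(source)["fraud"]
replica_rate = replica.count("fraud") / len(replica)
print(f"source fraud rate:  {source_rate:.3%}")
print(f"replica fraud rate: {replica_rate:.3%}")
```

A larger replica gives more rows but the same proportion of fraud. The model therefore still sees fraud as a rare, weak signal, and any bias in the source proportions carries over unchanged.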

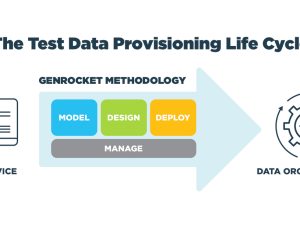

Option 4: Use GenRocket to Design & Generate Any Volume & Variety of Synthetic Data

GenRocket has spent over a decade refining a patented, enterprise-class synthetic data platform that can design any volume and variety of data. This platform has many advantages over the other available solutions in the market.

* Achieves functionality!

- Data Security* – GenRocket generates secure, privacy-compliant synthetic data.

- Data Bias* – GenRocket allows data to be designed to minimize or eliminate bias.

- Data Accuracy & Completeness* – GenRocket enables the creation of accurate, complete datasets with maintained referential integrity.

- Data Consistency* – GenRocket ensures consistent synthetic data generation across datasets.

- Sufficient Volume* – GenRocket can generate any required volume of synthetic data.

- Evidence for Audits* – GenRocket’s synthetic data is fully reproducible for audit purposes.

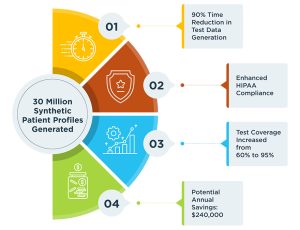

GenRocket provides a rules-based synthetic data platform that allows any volume and variety of machine learning data to be designed. The platform fully addresses the challenges of data quality, volume, security, referential integrity, and transparency that are so critical in the financial services industry.

It’s a modular and extensible framework that enables data scientists to design the exact machine learning training data needed for accurate model training, validation, and testing.

The platform can accurately generate billions of records, regardless of the size of the source dataset. Fraud signals can also be designed and amplified even when there are no real examples to copy.
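To illustrate what “designing” data means as opposed to copying it, here is a hypothetical Python sketch. It is not GenRocket's API, rule syntax, or configuration format. It assumes a made-up fraud rule: large amounts during hours 1 to 4, versus small daytime amounts for legitimate activity. The fraud ratio is set explicitly rather than inherited from production. Every transaction references an existing customer, and a fixed seed lets the same dataset be regenerated for an audit.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Txn:
    txn_id: int
    customer_id: int
    amount: float
    hour: int
    is_fraud: bool


def generate(n_customers: int, n_txns: int, fraud_ratio: float, seed: int) -> list[Txn]:
    """Hypothetical rules-based generator: fraud is designed, not copied.

    Assumed rule: fraud = amount 2,000-10,000 during hours 1-4;
    legitimate = amount 5-500 during hours 8-20.
    """
    rng = random.Random(seed)  # fixed seed => identical output on every run
    customer_ids = list(range(1, n_customers + 1))
    n_fraud = round(n_txns * fraud_ratio)  # designed, exact class balance
    labels = [True] * n_fraud + [False] * (n_txns - n_fraud)
    rng.shuffle(labels)

    txns = []
    for txn_id, is_fraud in enumerate(labels, start=1):
        customer_id = rng.choice(customer_ids)  # always references a real customer
        if is_fraud:
            amount = round(rng.uniform(2_000, 10_000), 2)
            hour = rng.randint(1, 4)
        else:
            amount = round(rng.uniform(5, 500), 2)
            hour = rng.randint(8, 20)
        txns.append(Txn(txn_id, customer_id, amount, hour, is_fraud))
    return txns


data = generate(n_customers=50, n_txns=10_000, fraud_ratio=0.2, seed=42)
print(sum(t.is_fraud for t in data))  # 2000
```

Raising `fraud_ratio` amplifies the fraud signal without needing any real fraud examples. Calling `generate` again with the same arguments reproduces the dataset exactly, which is the property that makes synthetic data usable as audit evidence.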

GenRocket’s synthetic data platform is a powerful tool designed for financial services organizations looking to deploy AI responsibly and effectively.

Partner with GenRocket for AI Training Data

AI and generative models can greatly improve financial services, sparking innovation and delivering value to customers. GenRocket’s synthetic data platform is a powerful solution for financial institutions looking to harness AI while mitigating risks.

Financial institutions are moving into an AI-driven future. By partnering with GenRocket, they can make full use of AI’s capabilities and ensure its responsible use. GenRocket’s platform helps these companies confidently lead the way into a new era of growth and innovation.

MACHINE LEARNING CASE STUDY: GenRocket’s Synthetic Data Accurately Trains AI-Assisted Tax Fraud Detection System

GenRocket’s synthetic data not only met the required volume and precision but also allowed the software to be tested thoroughly, minimizing the potential for false positives or negatives in fraud detection. This partnership enabled the AI system to continually adapt to new tax evasion methods. The approach can be expanded to other regions and tax types, and it has proven invaluable in maintaining governmental revenue integrity.

You can view the full case study here: GenRocket Tax Fraud Detection System Case Study