The Rise of the Machines: GenRocket Synthetic Training Data for Machine Learning

Some analysts believe that the machine learning market may surpass $9 billion USD this year. The market is growing rapidly as new avenues for software development and applications emerge, and with these new applications comes the need for massive volumes of data to train ML models to perform with a high level of accuracy and consistency. Here, we present an overview of the role synthetic data can play in training machine learning algorithms, and we identify the best applications for GenRocket’s Synthetic Test Data Automation platform in this rapidly growing industry.

Machine Learning Is Ubiquitous

In global enterprises, machine learning technology is being operationalized for almost every aspect of the business. The potential applications of AI and ML are both ubiquitous and diverse. Here are some of the more high-profile enterprise-class use cases:

- Bank/credit fraud

- Tax collection

- Insurance fraud

- Health monitoring

- Intrusion detection

Each application of machine learning requires its own unique set of algorithms that must be trained, validated, and tested using a huge volume of training data. Quality training data is the key to accurate model performance, whether the model is used to forecast future events based on the patterns of past events or to identify anomalies in a transaction data stream that must be addressed in real time.

Machine Learning Requires Plenty of Data

According to the Columbia University School of Engineering, the goal of machine learning is to “make machines learn from experience.” Machines “learn” best when they have plentiful examples to study and clear, consistent rules to follow. That’s why machine learning tools work well for tasks such as checking grammar and spelling in a word processing program: the rules of both are clear and (mostly) consistent. The same goes for machine learning programs that examine forms and documents submitted to a system, such as tax returns or insurance reimbursement requests. The rules are clear for what information goes into each field (text or a number) and how it is processed, routed, or calculated.

As a machine learning algorithm processes additional data inputs, it continues to learn and to improve at its programmed function. This is the key to machine learning: copious amounts of data received over time, with enough variables to help the program make predictions or understand anomalous events and recommend corrective actions.

Training Data: The Programming Language of AI and ML

The profile of the data used to train an ML model is so essential to its successful operation that it can almost be thought of as a programming language. Provisioning quality training data is the most important, and also the most difficult, part of deploying an ML system. The first challenge is collecting the data, which may come from multiple sources and contain sensitive information that must be thoroughly anonymized before it can be used in a development environment.

But that’s not the only challenge. To deliver the data quality required for accurate model training, it must go through a meticulous data preparation process. Data that has been copied and masked from a production environment often contains missing, duplicate, inaccurate or inconsistent values. Data accuracy and integrity must be ensured before it can be used for training. As a result, sourcing quality training data from production can take up to 80% of the total project time.
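To make the preparation problem concrete, here is a minimal Python sketch that profiles a handful of records for missing, duplicate, invalid, and inconsistent values. The field names (`txn_id`, `amount`, `country`), the sample records, and the validity rules are hypothetical, chosen only for illustration.

```python
from collections import Counter

# Hypothetical records copied from production; None marks a missing value.
records = [
    {"txn_id": 1, "amount": 120.50, "country": "US"},
    {"txn_id": 2, "amount": None, "country": "US"},
    {"txn_id": 2, "amount": 75.00, "country": "CA"},    # duplicate key
    {"txn_id": 3, "amount": -40.00, "country": "usa"},  # invalid amount, inconsistent code
]

VALID_COUNTRIES = {"US", "CA", "MX"}  # assumed reference list of country codes


def profile_quality(rows):
    """Count common data-quality problems in a list of dict records."""
    missing = sum(1 for r in rows for v in r.values() if v is None)
    id_counts = Counter(r["txn_id"] for r in rows)
    duplicates = sum(c - 1 for c in id_counts.values() if c > 1)
    invalid_amounts = sum(
        1 for r in rows if r["amount"] is not None and r["amount"] < 0
    )
    inconsistent_codes = sum(1 for r in rows if r["country"] not in VALID_COUNTRIES)
    return {
        "missing": missing,
        "duplicates": duplicates,
        "invalid_amounts": invalid_amounts,
        "inconsistent_codes": inconsistent_codes,
    }


print(profile_quality(records))
```

Every non-zero count is a record that someone must fix or discard before training, which is part of why preparation can consume so much of a project’s time.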

Production data presents several challenges. It:

- Requires extensive preparation to ensure data quality

- Must be augmented to provide sufficient data quantity

- Must be consolidated from multiple data sources

- Must be transformed to the required data format

- Must be masked to remove sensitive or private information

- Does not exist for new applications that have no historical data


Because of the huge volume of training data required, developers are increasingly turning to synthetic data to accelerate the process. Synthetic data is not real data; it is artificially generated data that closely mimics the structure and characteristics of the original. Because it contains no real records, it is secure, and it can be generated in high volume.

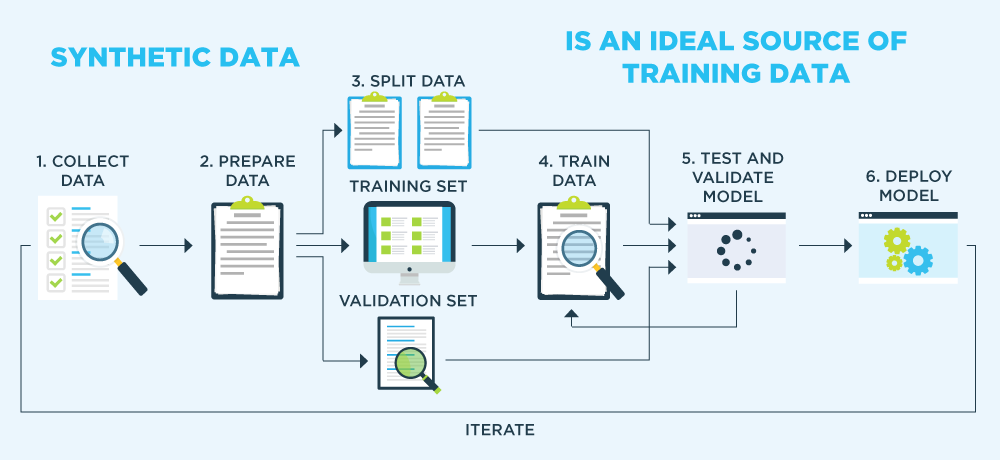

After training data is collected and prepared, the dataset is split: most of it is used to train the model, some is used to validate the model during development, and the rest is held back to test the trained model. After the model is deployed, this process is repeated over many iterations to refine and continuously improve the model’s accuracy.
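The split described above can be sketched in a few lines of Python. The 70/15/15 proportions and the fixed seed are assumptions for illustration; real projects choose ratios to suit the dataset, and often stratify by label so rare classes appear in every subset.

```python
import random


def split_dataset(rows, train=0.7, validation=0.15, seed=42):
    """Shuffle rows and split them into train, validation, and test lists.

    Whatever remains after the train and validation fractions goes to test.
    """
    if not 0 < train + validation < 1:
        raise ValueError("train + validation must be between 0 and 1")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split repeatable
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * validation)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


train_set, val_set, test_set = split_dataset(range(1000))
print(len(train_set), len(val_set), len(test_set))  # 700 150 150
```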

The Accuracy of Any ML Model Depends on the Quality of Its Training Data

The problem with most commercial synthetic data solutions is that they generate training data by examining the statistical profiles of real production data. That means a synthetic replica will inherit the quality problems, biases, and limited data variety of the original production data. This becomes a problem when, for example, a model must be trained to detect anomalies that occur with a frequency of only 0.001% in the real world. Yet that underrepresented anomaly might be a fraudulent transaction worth millions of dollars to a financial institution.
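The arithmetic behind this example is worth spelling out. The sketch below takes the 0.001% anomaly rate from the paragraph above and computes how many anomalous rows a production-mirroring dataset would contain, and how large it would need to be to supply 10,000 labeled anomalies. The 10,000 target is an arbitrary illustration.

```python
import math
from fractions import Fraction

# 0.001% expressed exactly as a fraction: 0.001 / 100 = 1 in 100,000.
ANOMALY_RATE = Fraction(1, 100_000)


def expected_anomalies(n_rows, rate=ANOMALY_RATE):
    """Expected number of anomalous rows in a sample that mirrors production."""
    return n_rows * rate


def rows_needed(target_anomalies, rate=ANOMALY_RATE):
    """Rows a production-mirroring dataset needs to contain the target count."""
    return math.ceil(target_anomalies / rate)


print(expected_anomalies(1_000_000))  # 10
print(rows_needed(10_000))            # 1000000000
```

In other words, a replica of one million production rows would contain only about ten examples of the behavior the model most needs to learn, and a billion rows would be needed to reach 10,000.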

Choosing the Right Synthetic Training Data Approach

When it comes to generating synthetic training data, there are two fundamental approaches:

- Generate training data based on deep learning algorithms that closely examine production data and then reproduce a statistically equivalent synthetic copy. This approach is often used for unsupervised learning of ML models and is appropriate when the patterns and predictors of future events are not known. The developer does not need to know the distribution profile of the training data, as it is learned directly from the production data source.

An example of this approach is training an algorithm used to predict a buyer’s product preferences when designing a personalized marketing campaign or an e-commerce product recommendation engine. The model’s predictions are based on the buyer’s purchase history as well as learned behaviors associated with the buyer’s demographic segment.

- Generate training data based on a data model and rules that define the statistical distribution profile required to accurately train the ML model. This approach is often used for supervised learning of ML models and is appropriate when data can be classified (labeled) and the distribution profiles required for training the algorithm are known to the developer or to someone with domain knowledge of the application environment.

An example of this approach is an anomaly detection system, where an algorithm must identify anomalous (outlier) events that might represent illegal or fraudulent behavior. Because the outlier conditions are known, they can be defined by rules and synthetic data can be generated to simulate a pre-determined data profile.
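The difference between the two approaches shows up clearly in a toy simulation. In the Python sketch below, `statistical_replica` is a deliberately simplified stand-in for a deep learning generator (it learns only the anomaly frequency), and the 10,000 fraud threshold and 5% target rate are hypothetical. The point is the contrast: the replica reproduces production’s rarity, while the rule-based generator produces exactly the share of labeled anomalies the developer asks for.

```python
import random

FRAUD_THRESHOLD = 10_000  # hypothetical rule: amounts above this are anomalous


def make_row(anomalous, rng):
    """Build one labeled transaction (amount, label) that obeys the rule."""
    if anomalous:
        amount = rng.uniform(FRAUD_THRESHOLD + 1, 50_000)
    else:
        amount = rng.uniform(1, FRAUD_THRESHOLD)
    return (round(amount, 2), int(anomalous))


def production_sample(n, anomaly_rate, rng):
    """Simulated production data in which anomalies are rare."""
    return [make_row(rng.random() < anomaly_rate, rng) for _ in range(n)]


def statistical_replica(source, n, rng):
    """Stand-in for a profile-learning generator: it estimates the anomaly
    frequency from the source and reproduces it, so the rarity is inherited."""
    learned_rate = sum(label for _, label in source) / len(source)
    return [make_row(rng.random() < learned_rate, rng) for _ in range(n)]


def rule_based(n, target_rate, rng):
    """Rule-based generation: the developer states the profile directly,
    here an exact share of anomalous rows."""
    n_anomalies = round(n * target_rate)
    rows = [make_row(i < n_anomalies, rng) for i in range(n)]
    rng.shuffle(rows)
    return rows


def count_anomalies(rows):
    return sum(label for _, label in rows)


rng = random.Random(7)
prod = production_sample(100_000, 0.00001, rng)    # 0.001%, as in the text
replica = statistical_replica(prod, 100_000, rng)
rules = rule_based(100_000, 0.05, rng)             # 5% anomalies, chosen by the developer

print("production:", count_anomalies(prod))
print("replica:   ", count_anomalies(replica))
print("rule-based:", count_anomalies(rules))  # 5000
```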

GenRocket’s Synthetic Training Data Solution

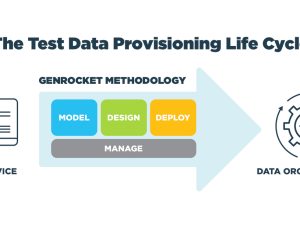

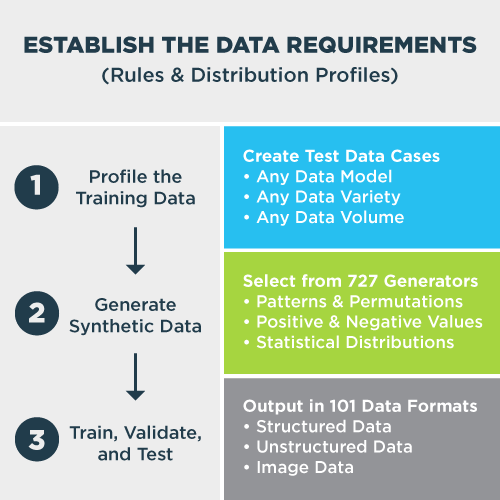

The second approach, rule-based synthetic data generation, is used by GenRocket to produce training data that offers the highest possible data quality, in any volume, and in any output format required by the application. With GenRocket, a data model is used as a blueprint for the data structure and is combined with rules for producing synthetic training data having a pre-determined statistical data profile.
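To illustrate the idea of a data model combined with generation rules, here is a minimal Python sketch. It is not GenRocket’s API or file format; the `Field` class, the `PAYMENT_MODEL` columns, and the chosen distributions are all hypothetical. Each field pairs a column name with a rule, so the statistical profile of the output is set explicitly rather than inferred from production data.

```python
import random
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Field:
    """One column in the data model plus the rule that generates its values."""
    name: str
    rule: Callable[[random.Random], object]


# Hypothetical data model for a payment record; each rule fixes part of the profile.
PAYMENT_MODEL = [
    Field("channel", lambda r: r.choices(["web", "mobile", "branch"], weights=[50, 40, 10])[0]),
    Field("amount", lambda r: round(r.lognormvariate(4.0, 1.0), 2)),
    Field("is_international", lambda r: r.random() < 0.15),
]


def generate(model, n, seed=0):
    """Apply every field rule to produce n rows as dictionaries."""
    rng = random.Random(seed)
    return [{f.name: f.rule(rng) for f in model} for _ in range(n)]


rows = generate(PAYMENT_MODEL, 10_000)
share_web = sum(r["channel"] == "web" for r in rows) / len(rows)
print(f"web share: {share_web:.2f}")  # close to the 50% weight set in the rule
```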

This approach enables the generation of synthetic data with much higher quality than training data produced by deep learning, because it provides control over the volume and variety of data needed to train the model. It does, however, require knowledge of the application domain and of the training data profile.

Here is a summary of the GenRocket ML Training Data Solution:

Synthetically Generated Training Data

- Fully automates the delivery of synthetic data

- Based on predefined rules and statistical profiles

- Simulates any data model with referential integrity

- Supports any variety or volume of data (billions of rows)

- Ensures data quality (accurate, consistent & complete)

- 100% secure & compliant with all data privacy laws

- Works when there’s no data, or augments existing data
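The referential-integrity point in the list above can be illustrated with a small parent/child example. This Python sketch uses hypothetical table and column names and is not GenRocket’s implementation; it generates customers first and then draws every transaction’s foreign key from the customer keys that already exist.

```python
import random


def generate_customers(n, rng):
    """Parent table: customers with unique primary keys."""
    return [{"customer_id": i, "segment": rng.choice(["retail", "business"])}
            for i in range(1, n + 1)]


def generate_transactions(customers, n, rng):
    """Child table: every foreign key is drawn from existing customer IDs,
    so no transaction can reference a customer that does not exist."""
    ids = [c["customer_id"] for c in customers]
    return [{"txn_id": t, "customer_id": rng.choice(ids),
             "amount": round(rng.uniform(5, 500), 2)}
            for t in range(1, n + 1)]


rng = random.Random(1)
customers = generate_customers(100, rng)
transactions = generate_transactions(customers, 5_000, rng)

# Check referential integrity: every child key resolves to a parent row.
known = {c["customer_id"] for c in customers}
orphans = [t for t in transactions if t["customer_id"] not in known]
print(len(orphans))  # 0
```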

Use GenRocket to generate any volume & variety of quality synthetic data in any data format in a matter of minutes.

GenRocket generates training data from pre-configured instruction files called Test Data Cases, which can be used and reused indefinitely. Existing Test Data Cases can be modified to generate positive and negative test data, combinations and permutations, structured and unstructured data formats, and version-controlled data for regression testing.
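The reuse idea can be sketched with a frozen Python dataclass acting as a generation recipe. The `TestDataCase` class here is a hypothetical simplification that only borrows the name of GenRocket’s concept; it does not reflect GenRocket’s actual file format. A fixed seed makes output repeatable for regression testing, and `dataclasses.replace` derives a negative-data variant without altering the original recipe.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class TestDataCase:
    """Hypothetical, simplified stand-in for a reusable generation recipe."""
    name: str
    rows: int
    min_amount: float
    max_amount: float
    seed: int  # a fixed seed makes each run reproducible for regression tests


def run(case):
    """Generate the amounts described by a test data case."""
    rng = random.Random(case.seed)
    return [round(rng.uniform(case.min_amount, case.max_amount), 2)
            for _ in range(case.rows)]


positive = TestDataCase("valid_amounts", rows=1_000, min_amount=1.0,
                        max_amount=10_000.0, seed=2024)
# Derive a negative variant by changing only the fields that differ.
negative = replace(positive, name="negative_amounts",
                   min_amount=-500.0, max_amount=-0.01)

assert run(positive) == run(positive)  # same case, same data every run
print(min(run(positive)) >= 1.0, max(run(negative)) < 0)  # True True
```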

This level of control over data volume and variety allows developers and testers to simulate more of the conditions that can produce false positives or false negatives, a problem often associated with training data sourced from production and used for unsupervised learning. By training and testing against these conditions, teams can anticipate false positive and false negative results and reduce them.
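Because every synthetic record carries a known label, false positives and false negatives can be counted directly. In this Python sketch the records, the 10,000 threshold, and `naive_detector` are hypothetical; the small-amount fraud case and the large legitimate purchase are the kinds of edge cases a rule-based dataset can include on purpose.

```python
def confusion_counts(labels, predictions):
    """Count true/false positives and negatives for binary labels (1 = anomaly)."""
    if len(labels) != len(predictions):
        raise ValueError("labels and predictions must have the same length")
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for actual, predicted in zip(labels, predictions):
        if predicted and actual:
            counts["tp"] += 1
        elif predicted and not actual:
            counts["fp"] += 1  # flagged a legitimate transaction
        elif not predicted and actual:
            counts["fn"] += 1  # missed a fraudulent transaction
        else:
            counts["tn"] += 1
    return counts


# Hypothetical labeled synthetic transactions: (amount, is_fraud).
synthetic = [(50, 0), (9_500, 0), (12_000, 1), (800, 1), (15_000, 0), (30_000, 1)]


def naive_detector(amount, threshold=10_000):
    """A deliberately simple model: flag any amount above the threshold."""
    return int(amount > threshold)


labels = [is_fraud for _, is_fraud in synthetic]
predictions = [naive_detector(amount) for amount, _ in synthetic]
print(confusion_counts(labels, predictions))
# {'tp': 2, 'fp': 1, 'tn': 2, 'fn': 1}
```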

And for greenfield applications that simply have no data to work with, GenRocket provides a platform for simulating any category of data based solely on a data model and a statistical data profile, and at any scale. GenRocket can synthetically generate one billion rows of complex training data with percentages and calculations in less than two hours.
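Two points in the paragraph above can be made concrete. Generating one billion rows in under two hours implies an average rate of roughly 139,000 rows per second, and reaching that scale calls for producing rows as a stream rather than holding them all in memory. This Python sketch shows both; the two-column row layout is hypothetical, and the sketch makes no claim about how GenRocket itself works.

```python
import csv
import io
import itertools
import random


def stream_rows(seed=0):
    """Yield synthetic rows lazily so memory use stays flat at any row count."""
    rng = random.Random(seed)
    for row_id in itertools.count(1):
        yield (row_id, round(rng.uniform(1, 1_000), 2))


def write_rows(out, n_rows, seed=0):
    """Write a header plus the first n_rows of the stream as CSV."""
    writer = csv.writer(out)
    writer.writerow(["row_id", "amount"])
    writer.writerows(itertools.islice(stream_rows(seed), n_rows))


def required_rate(n_rows, seconds):
    """Average rows per second needed to produce n_rows within the time budget."""
    return n_rows / seconds


buffer = io.StringIO()
write_rows(buffer, 5)
print(buffer.getvalue().count("\n"))                      # 6 (header + 5 rows)
print(round(required_rate(1_000_000_000, 2 * 60 * 60)))   # 138889
```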

GenRocket’s powerful data design features enable machine learning engineers and testers to define training data for multiple use cases, store them, adapt them, and run many test files of synthetic data so they can test their machine learning programs and get them to market quickly. GenRocket is uniquely poised to produce the volume, variety, and velocity of synthetic training data to meet today’s market demands.